In the past, face recognition mainly covered technologies and systems such as face image acquisition, face image preprocessing, identity confirmation, and identity search. It has since gradually extended to driver detection, pedestrian tracking, and even dynamic object tracking in ADAS. In other words, face recognition systems have evolved from simple image processing to real-time video processing. The algorithms have also shifted from traditional statistical methods such as AdaBoost and PCA to deep learning methods and their variants, such as CNN and R-CNN. Today, a considerable number of researchers have begun to study 3D face recognition, and such projects are supported by academia, industry, and the state.

First, consider the current state of research. As the trend above shows, the main research direction today is applying deep learning to video-based face recognition.

The main researchers are as follows: Professor Shan Shiguang of the Institute of Computing Technology of the Chinese Academy of Sciences, Professor Li Ziqing of the Institute of Biometrics of the Chinese Academy of Sciences, Professor Su Guangda of Tsinghua University, Professor Tang Xiaoou of the Chinese University of Hong Kong, Ross B. Girshick, etc.

Main open source projects:

SeetaFace face recognition engine: developed by the face recognition research group led by researcher Shan Shiguang at the Institute of Computing Technology, Chinese Academy of Sciences. The code is written in C++ and does not depend on any third-party libraries. It is released under the BSD-2 license, so academia and industry can use it free of charge. GitHub link:

https://github.com/seetaface/SeetaFaceEngine

Main software API/SDK:

Face++: Face++.com is a cloud service platform that provides free face detection, face recognition, face attribute analysis, and other services. Face++ is a face technology cloud platform from Beijing Megvii Technology Co., Ltd. It won the annual championship of the Dark Horse Competition and has received investment from Legend Star.

SkyBiometry: mainly provides face detection, face recognition, and face grouping.

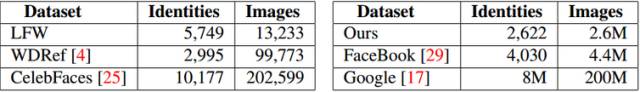

The main face image databases: the best openly available face image databases currently include LFW (Labeled Faces in the Wild) and YTF (YouTube Faces). Most current experimental data sets are derived from LFW, and image-based face recognition accuracy on it has reached 99%, so existing image databases are essentially saturated. The following is a summary of existing face image databases:

More and more companies in China now work on face recognition, and its applications are very broad. Among them, Hanwang Technology has the highest market share. The research directions and current status of the major companies are as follows:

Hanwang Technology: Hanwang Technology focuses on face recognition for identity authentication, mainly in access control systems, attendance systems, and similar products.

iFLYTEK: with the support of Professor Tang Xiaoou of the Chinese University of Hong Kong, iFLYTEK has developed a face recognition technology based on Gaussian processes, GaussianFace, whose recognition rate on LFW is 98.52%. DeepID2, also from Tang Xiaoou's group, has reached a recognition rate of 99.4% on LFW.

Sichuan University Zhisheng: the company's current research focus is 3D face recognition, and it has expanded into industrial production of 3D full-face cameras and related products.

SenseTime: a company dedicated to breakthroughs in deep learning, the core technology of artificial intelligence, and to building AI and big data analytics solutions for industry. It is highly competitive in face recognition, text recognition, human body recognition, vehicle recognition, object recognition, and image processing. Its face recognition technology locates 106 facial key points.

The process of face recognition

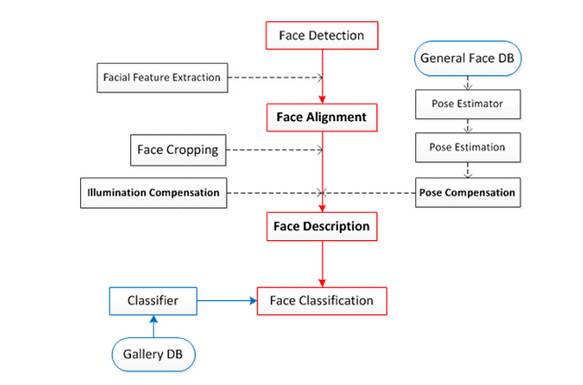

Face recognition is mainly divided into four parts: face detection, face alignment, face verification, and face identification.

Face detection: detect the faces in an image and mark each result with a rectangular bounding box. OpenCV provides a Haar classifier that can be used for this directly.

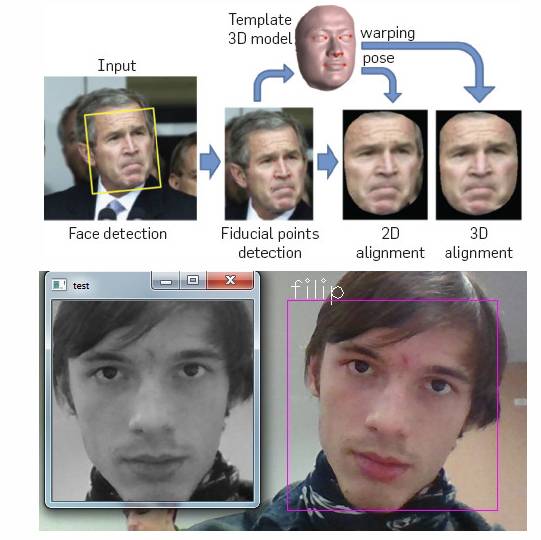

Face alignment: correct the pose of the detected face so that it is as frontal as possible; this correction improves recognition accuracy. Correction methods include 2D correction and 3D correction, and 3D correction handles profile (side) faces better. Face correction includes a step that locates feature points, mainly at positions such as the left side of the nose, the bottom of the nostrils, the pupils, and the bottom of the upper lip. Once the positions of these feature points are known, a position-driven deformation is applied and the face is "corrected", as shown below:
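The simplest kind of 2D correction can be sketched as follows, assuming the two pupil positions have already been detected: measure the in-plane angle of the line between the eyes, then rotate the points about the eye midpoint so that the eyes become level. The function names and coordinates are hypothetical; a real system would warp the whole image with the same rotation.

```python
import math

def eye_roll_angle(left_eye, right_eye):
    """Angle (radians) of the line from the left pupil to the right pupil."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.atan2(dy, dx)

def rotate_points(points, center, angle):
    """Rotate (x, y) points about `center` by -angle, undoing the measured roll."""
    cx, cy = center
    c, s = math.cos(-angle), math.sin(-angle)
    out = []
    for x, y in points:
        x0, y0 = x - cx, y - cy
        out.append((cx + c * x0 - s * y0, cy + s * x0 + c * y0))
    return out

def level_eyes(left_eye, right_eye, other_points=()):
    """Return [left_eye, right_eye, *other_points] rotated so the eyes are level."""
    angle = eye_roll_angle(left_eye, right_eye)
    center = ((left_eye[0] + right_eye[0]) / 2, (left_eye[1] + right_eye[1]) / 2)
    return rotate_points([left_eye, right_eye, *other_points], center, angle)

if __name__ == "__main__":
    # Hypothetical pupils on a face tilted by 45 degrees
    pts = level_eyes((100, 100), (150, 150))
    print([(round(x, 3), round(y, 3)) for x, y in pts])
```

Because the transform is a pure rotation, the distance between the eyes is preserved; scaling to a fixed eye distance would be a separate step.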

A 2014 technique from MSRA, Joint Cascade Face Detection and Alignment (ECCV 2014), performs face detection and alignment jointly in about 30 ms.

Face verification:

Face verification is based on pair matching, so the answer it gives is "yes" or "no". In practice, a test image is given and matched against enrolled images one pair at a time; a match means that the test image and the matched face belong to the same person. This approach is typically used in face-scan clock-in systems for small offices, which work roughly as follows. Employees' face photos are enrolled offline one by one (usually more than one photo per employee). When an employee clocks in, the camera captures an image, and the system performs face detection, then face correction, and then the face verification described above. If the result is "yes", the person at the scanner belongs to the office, and verification is complete. Because each enrolled face is associated with the person's name, a successful verification also tells us who the person is. The advantages of such a system are its low development cost and its suitability for small offices. The disadvantages are that the face must not be occluded during capture and the pose must be fairly frontal (our group has such a system, but I have not tried it myself). The following figure gives a schematic description:
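The enroll-then-verify flow can be sketched as below, assuming every face has already been detected, aligned, and turned into a fixed-length feature vector by some model (not shown). The dictionary layout, the Euclidean distance, and the 0.6 threshold are illustrative assumptions, not details from the text.

```python
import math

def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def verify(probe, template, threshold=0.6):
    """1:1 pair matching: True ("yes") if the two faces are close enough."""
    return distance(probe, template) <= threshold

def clock_in(probe, enrolled, threshold=0.6):
    """Pair-match the probe against each enrolled template in turn.

    `enrolled` maps a name to the list of feature vectors recorded offline
    (an employee may have several). Returns the first name that verifies,
    or None if nobody matches.
    """
    for name, templates in enrolled.items():
        if any(verify(probe, t, threshold) for t in templates):
            return name
    return None
```

Returning the first match mirrors the one-by-one pair matching described above; a stricter variant would compare all templates and keep the closest.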

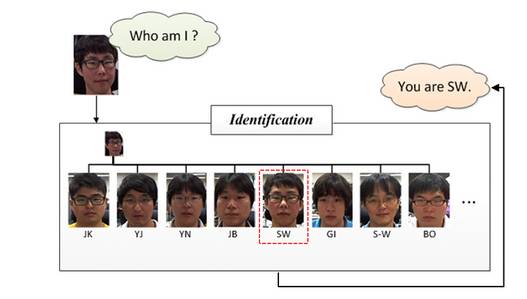

Face identification (face identification/recognition): face identification, or face recognition, answers "Who am I?", as shown in the figure below. Compared with the pair matching used in face verification, it relies more on classification in the recognition stage: it classifies the face image produced by the two previous steps, face detection and face correction.
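One simple way to treat identification as classification is a nearest-centroid classifier over enrolled feature vectors, with an optional rejection threshold for people who are not enrolled. This is a minimal sketch under that assumption, not the classifier of any particular system; feature extraction is assumed to happen elsewhere.

```python
import math

def centroid(vectors):
    """Mean of a list of equal-length feature vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def identify(probe, gallery, reject_above=None):
    """1:N identification: return (name, distance) of the closest class centroid.

    `gallery` maps each identity to its enrolled feature vectors. If
    `reject_above` is given and even the best distance exceeds it, the
    returned name is None ("unknown person").
    """
    best_name, best_dist = None, math.inf
    for name, vectors in gallery.items():
        d = math.dist(probe, centroid(vectors))
        if d < best_dist:
            best_name, best_dist = name, d
    if reject_above is not None and best_dist > reject_above:
        return None, best_dist
    return best_name, best_dist
```

Unlike verification, which answers yes/no for one pair, this compares the probe with every identity and picks the best one.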

From the four concepts above, face recognition can be seen as consisting of three large, independent modules:

Breaking these steps down further gives the following process diagram:

Face recognition classification

With the development of face recognition technology, methods now fall into three main categories: image-based recognition, video-based recognition, and three-dimensional (3D) face recognition.

Image-based recognition method: this is a static image recognition process that relies mainly on image processing. The main algorithms include PCA, EP, kernel methods, Bayesian frameworks, SVM, HMM, and AdaBoost. In 2014, face recognition made a major breakthrough with deep learning, represented by DeepFace (97.25%) and Face++ (97.27%). However, DeepFace was trained on 4 million images, whereas GaussianFace, from Tang Xiaoou's group at the Chinese University of Hong Kong, was trained on only 20,000.

Video-based real-time recognition method: here face recognition involves tracking. It must not only find the position and size of each face in the video, but also determine which faces in different frames correspond to each other.
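The frame-to-frame correspondence problem can be sketched with a common baseline that the text does not specify: greedily pair each face box in the current frame with the previous-frame box it overlaps most, measured by intersection-over-union (IoU). The 0.3 threshold is an assumed value; production trackers usually add motion models and appearance features.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def match_faces(prev_boxes, curr_boxes, min_iou=0.3):
    """Greedily pair face boxes in consecutive frames by highest IoU.

    Returns {index_in_curr: index_in_prev}; current boxes left unmatched
    are treated as newly appearing faces.
    """
    pairs = sorted(
        ((iou(p, c), i, j) for i, p in enumerate(prev_boxes) for j, c in enumerate(curr_boxes)),
        reverse=True,
    )
    used_prev, used_curr, matches = set(), set(), {}
    for score, i, j in pairs:
        if score < min_iou:
            break  # remaining pairs overlap too little
        if i in used_prev or j in used_curr:
            continue
        matches[j] = i
        used_prev.add(i)
        used_curr.add(j)
    return matches
```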

DeepFace

Reference papers and materials:
1. DeepFace paper: DeepFace: Closing the Gap to Human-Level Performance in Face Verification
2. Blog post on understanding convolutional neural networks: http://blog.csdn.net/zouxy09/article/details/8781543
3. Blog post deriving convolutional neural networks: http://blog.csdn.net/zouxy09/article/details/9993371/
4. Notes on Convolutional Neural Networks
5. Neural Network for Recognition of Handwritten Digits
6. DeepFace blog post: http://blog.csdn.net/Hao_Zhang_Vision/article/details/52831399?locationNum=2&fps=1

DeepFace was proposed by Facebook and was followed by DeepID and FaceNet. Ideas from DeepFace are reflected in both DeepID and FaceNet, so DeepFace can be regarded as the foundation of CNN-based face recognition. Deep learning has since achieved very good results in face recognition, so we start with DeepFace.

While studying DeepFace, we introduce not only the methods DeepFace uses but also the other main algorithms for each step, to give a simple but comprehensive overview of current image-based face recognition technology.

1. The basic framework of DeepFace

1.1 The basic process of face recognition

face detection -> face alignment -> face verification -> face identification

1.2 Face detection (face detection)

1.2.1 Existing technology:

Haar classifier: OpenCV has long provided a Haar classifier for face detection that can be used directly; it is based on the Viola-Jones algorithm.
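The key idea behind Viola-Jones detection is the integral image, which turns any rectangle sum, and therefore any Haar-like feature, into four table lookups. Below is a minimal pure-Python sketch; only one horizontal two-rectangle feature type is shown, and the function names are illustrative rather than OpenCV's.

```python
def integral_image(img):
    """ii[y][x] = sum of img[0..y-1][0..x-1]; the extra zero row/column simplifies lookups."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii

def rect_sum(ii, x, y, w, h):
    """Sum of the w*h rectangle whose top-left pixel is (x, y), in O(1)."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]

def two_rect_feature(ii, x, y, w, h):
    """Horizontal two-rectangle Haar-like feature: left half minus right half (w even)."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)
```

Once the integral image is built, evaluating a feature costs the same regardless of rectangle size, which is what makes scanning many windows at many scales fast.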

AdaBoost algorithm (cascade classifier):
1. Reference paper: Robust Real-Time Face Detection
2. Chinese blog post: http://blog.csdn.net/cyh_24/article/details/39755661
3. Blog post: http://blog.sina.com.cn/s/blog_7769660f01019ep0.html
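The cascade structure can be sketched as follows: each stage is an AdaBoost-weighted vote of weak classifiers (here, decision stumps on precomputed feature values), and a window is rejected as soon as any stage's score falls below that stage's threshold. All stage parameters in the test are made up for illustration; real cascades are trained.

```python
from dataclasses import dataclass

@dataclass
class Stump:
    feature: int      # index into the window's feature vector
    threshold: float
    polarity: int     # +1: vote "face" when value >= threshold; -1: when value < threshold
    alpha: float      # AdaBoost weight of this weak classifier

    def predict(self, features):
        value = features[self.feature]
        is_face = value >= self.threshold if self.polarity > 0 else value < self.threshold
        return 1 if is_face else 0

@dataclass
class Stage:
    stumps: list
    threshold: float

    def passes(self, features):
        """Weighted vote of the stage's weak classifiers against the stage threshold."""
        score = sum(s.alpha * s.predict(features) for s in self.stumps)
        return score >= self.threshold

def cascade_classify(features, stages):
    """Return (is_face, stages_evaluated); most non-face windows exit early."""
    for i, stage in enumerate(stages, start=1):
        if not stage.passes(features):
            return False, i
    return True, len(stages)
```

The early exit is the point of the cascade: cheap early stages discard most background windows, so the expensive later stages run only on promising candidates.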

1.2.2 Methods used in the article

The paper uses a face detection method based on fiducial points (a fiducial point detector).

First, 6 fiducial points are selected: the 2 eye centers, 1 point on the nose, and 3 points on the mouth.

These points are localized with support vector regression (SVR) trained on LBP features.

The effect is as follows:

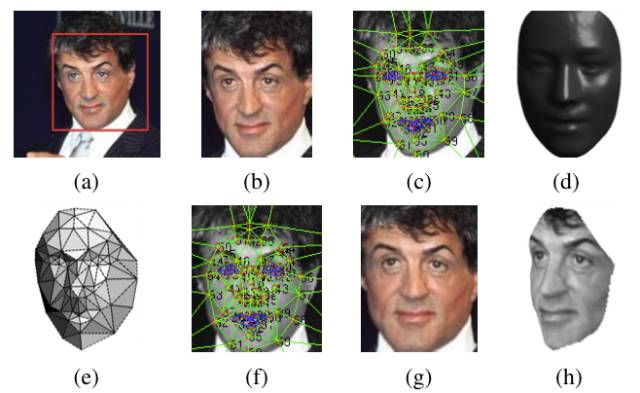

1.3 Face alignment

2D alignment:

After detection, the image is scaled, rotated, and translated so that the six fiducial points land on six anchor locations, and the face region is then cropped out.
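Scaling, rotating, and translating detected fiducials onto fixed anchor locations amounts to fitting a 2D similarity transform. A minimal least-squares sketch using complex numbers (x + iy): one complex factor `a` encodes scale and rotation, and `b` encodes translation. The anchor coordinates in the test are hypothetical, not DeepFace's.

```python
def fit_similarity(src, dst):
    """Least-squares similarity transform mapping src points onto dst points.

    Points are (x, y) tuples. Returns complex (a, b) with dst ~= a * src + b:
    abs(a) is the scale, the argument of a is the rotation, b the translation.
    """
    z = [complex(x, y) for x, y in src]
    w = [complex(x, y) for x, y in dst]
    n = len(z)
    z_mean, w_mean = sum(z) / n, sum(w) / n
    num = sum((wi - w_mean) * (zi - z_mean).conjugate() for zi, wi in zip(z, w))
    den = sum(abs(zi - z_mean) ** 2 for zi in z)
    a = num / den
    b = w_mean - a * z_mean
    return a, b

def apply_similarity(a, b, points):
    """Apply p -> a * p + b to a list of (x, y) points."""
    out = []
    for x, y in points:
        p = a * complex(x, y) + b
        out.append((p.real, p.imag))
    return out
```

With six detected points and six anchors, `fit_similarity(detected, anchors)` gives the transform to apply to the image before cropping.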

3D alignment:

Use a generic 3D face model to map the 2D-aligned face into 3D: locate 67 fiducial points, build a Delaunay triangulation over them, and add triangles along the face contour to avoid discontinuities.

Convert the triangulated face into a 3D shape

The triangulated face becomes a 3D triangle mesh with depth

Rotate the triangulated mesh so that the face is frontal

The final frontalized face

The effect is as follows:

The 2D alignment above corresponds to Figure (b), and the 3D alignment corresponds to (c) ~ (h) in turn.
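The frontalization step described above warps the face triangle by triangle (a piecewise affine warp over the triangulation). The per-triangle step can be sketched as: express a point in barycentric coordinates of its source triangle, then rebuild it from the same weights in the target triangle. This is a generic sketch; how the target (frontal) triangle positions are obtained from the 3D model is not shown.

```python
def barycentric(p, tri):
    """Barycentric weights (l1, l2, l3) of point p in triangle tri = (A, B, C)."""
    (x, y), ((x1, y1), (x2, y2), (x3, y3)) = p, tri
    det = (y2 - y3) * (x1 - x3) + (x3 - x2) * (y1 - y3)
    l1 = ((y2 - y3) * (x - x3) + (x3 - x2) * (y - y3)) / det
    l2 = ((y3 - y1) * (x - x3) + (x1 - x3) * (y - y3)) / det
    return l1, l2, 1.0 - l1 - l2

def warp_point(p, src_tri, dst_tri):
    """Map p from src_tri to dst_tri with the affine map defined by the two triangles."""
    l1, l2, l3 = barycentric(p, src_tri)
    (ax, ay), (bx, by), (cx, cy) = dst_tri
    return (l1 * ax + l2 * bx + l3 * cx, l1 * ay + l2 * by + l3 * cy)

def inside(p, tri, eps=1e-12):
    """True if p lies in (or on an edge of) tri; used to pick the triangle for p."""
    return all(l >= -eps for l in barycentric(p, tri))
```

Applying `warp_point` to every pixel, using whichever triangle contains it, yields the full piecewise affine warp.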

1.4 Face verification

1.4.1 Existing technology

High-dimensional LBP + Joint Bayesian: combines high-dimensional LBP features with a Joint Bayesian model.

Paper: Bayesian Face Revisited: A Joint Formulation

DeepID series: uses an SVM to fuse seven Joint Bayesian models, reaching an accuracy of 99.15%.

Paper: Deep Learning Face Representation by Joint Identification-Verification

1.4.2 Methods in the article

In the paper, a deep neural network (DNN) is trained on a multi-class face recognition task. The network structure is shown in the figure above.

Structural parameters: after 3D alignment, every image is 152×152 and is fed into the network above. The layer parameters are as follows:

C1 (Conv): 32 convolution kernels of size 11×11×3

M2 (max-pooling): 3×3, stride=2

C3 (Conv): 16 convolution kernels of size 9×9

L4 (Local-Conv): 16 kernels of size 9×9; "Local" means the kernel parameters are not shared across positions

L5 (Local-Conv): 16 kernels of size 7×7, parameters not shared

L6 (Local-Conv): 16 kernels of size 5×5, parameters not shared

F7 (Fully-connected): 4096 dimensions

F8 (Fully-connected + Softmax): 4030 dimensions

Extract low-level features: the process is as follows:

Preprocessing stage: the 3-channel face image is 3D-aligned and normalized to 152×152 pixels, giving a 152×152×3 input.

Convolutional layer C1: C1 contains 32 filters (convolution kernels) of size 11×11×3, producing 32 feature maps of size 142×142 (32×142×142).

Max-pooling layer M2: M2 uses a 3×3 sliding window with a stride of 2, and each channel is pooled independently.

Convolutional layer C3: C3 contains 16 three-dimensional filters of size 9×9×16.

These three layers extract low-level features such as simple edges and textures. Max-pooling makes the convolutional network more robust to local translations; when the input is an aligned face, it makes the network more robust to small registration errors. However, pooling causes the network to lose some information about the precise positions of detailed facial structures and micro-textures, so the paper applies max-pooling only after the first convolutional layer. These first layers are referred to as a front-end adaptive preprocessing stage. They account for most of the computation but hold very few parameters; they simply expand the input image into a set of simple local features.

Subsequent layers: L4, L5, and L6 are all locally connected layers. Like a convolutional layer, each applies a filter bank, but a different set of filters is learned at every location of the feature map. Because different regions of an aligned face have different local statistics, the spatial stationarity assumption behind convolution does not hold. For example, the area between the eyes and the eyebrows looks very different from the area between the nose and the mouth and is highly discriminative. In other words, the DNN architecture is tailored to the fact that its input images are aligned.

Using locally connected layers does not affect the computational cost of feature extraction, but it does increase the number of parameters to train. Only because such a large labeled face dataset is available can the network afford three large locally connected layers. Using locally connected layers (without weight sharing) is also justified by the fact that each output unit of a locally connected layer is affected by a large patch of the input.

For example, the output of layer L6 is affected by a 74×74×3 input patch. In an aligned face, it is hard to share any statistics between such large patches.

Top layers: finally, the two layers at the top of the network (F7, F8) are fully connected: each output unit is connected to all inputs. These layers can capture correlations between features extracted in distant parts of the face image, for example the relationship between the position and shape of the eyes and the position and shape of the mouth. The output of the first fully connected layer, F7, is used as the raw face representation feature vector.

In terms of feature expression, this feature vector is quite different from the traditional LBP-based feature description. Traditional methods usually use local feature descriptions (computing histograms) and use them as the input of the classifier.

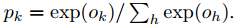

The output of the last fully connected layer, F8, is fed into a K-way softmax (K is the number of classes), which produces a probability distribution over the class labels. Let $o_k$ denote the k-th output of the network for an input image; the probability assigned to class label k is:

$$p_k = \frac{\exp(o_k)}{\sum_{h} \exp(o_h)}$$

The goal of training is to maximize the probability of the correct class (face identity). This is achieved by minimizing the cross-entropy loss of each training sample. If k is the label of the correct class for a given input, the cross-entropy loss is:

$$L = -\log p_k$$

The cross-entropy loss is minimized by computing the gradient of L with respect to the parameters and applying stochastic gradient descent (SGD).

The gradient is calculated by the standard backpropagation of the error. It is very interesting that the features generated by this network are very sparse. More than 75% of the top-level feature elements are 0. This is mainly due to the use of the ReLU activation function. This soft threshold nonlinear function is used in all convolutional layers, locally connected layers and fully connected layers (except for the last layer F8), resulting in highly nonlinear and sparse features after the overall cascade. Sparsity is also related to the use of dropout regularization, that is, random feature elements are set to 0 during training. We only used dropout in the F7 fully connected layer. Due to the large training set, we did not find significant overfitting during the training process.
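The two sources of sparsity mentioned above, ReLU and dropout, can be illustrated on a plain list of activations. This is a toy sketch with made-up pre-activation values; rescaling the surviving elements by 1/(1 - rate) ("inverted" dropout) is a common convention and an assumption here, not something the text specifies.

```python
import random

def relu(values):
    """Soft-thresholding non-linearity: negative inputs become exactly zero."""
    return [max(0.0, v) for v in values]

def dropout(values, rate, rng):
    """Training-time dropout: zero each element with probability `rate` and
    rescale survivors by 1 / (1 - rate) (inverted dropout, assumed)."""
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]

def sparsity(values):
    """Fraction of elements that are exactly zero."""
    return sum(1 for v in values if v == 0.0) / len(values)

if __name__ == "__main__":
    rng = random.Random(0)
    pre = [rng.gauss(-0.7, 1.0) for _ in range(4096)]  # toy pre-activations, mostly negative
    act = relu(pre)
    print("after ReLU:", round(sparsity(act), 3))
    print("after ReLU + dropout(0.5):", round(sparsity(dropout(act, 0.5, rng)), 3))
```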

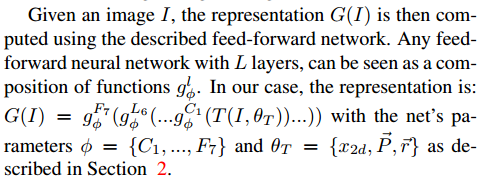

Given an image I, its feature representation G(I) is computed by the feedforward network. An L-layer feedforward network can be regarded as a composition of functions:

$$G(I) = f_L\big(f_{L-1}(\cdots f_1(I)\cdots)\big)$$

Normalization: as the final stage, the elements of the feature are normalized to lie between 0 and 1 to reduce the feature's sensitivity to illumination changes: each element of the feature vector is divided by its maximum value over the training set, and L2 normalization is then applied. Because ReLU activations are used, the system is not invariant to rescaling of the image intensities.

For the output 4096-d vector:

First, each dimension is normalized, that is, for each dimension in the result vector, it must be divided by the maximum value of that dimension in the entire training set.

Each vector is L2 normalized.
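The two normalization steps can be sketched as follows, assuming the training-set features are available as lists of non-negative numbers. Dimensions that are zero across the whole training set are left at zero to avoid dividing by zero; that handling is an assumption, not specified in the text.

```python
import math

def fit_max_per_dim(train_features):
    """Largest value of each dimension over the training set (features are >= 0 after ReLU)."""
    return [max(col) for col in zip(*train_features)]

def normalize(feature, dim_max):
    """Divide each dimension by its training-set maximum, then L2-normalize."""
    scaled = [f / m if m > 0 else 0.0 for f, m in zip(feature, dim_max)]
    norm = math.sqrt(sum(v * v for v in scaled))
    return [v / norm for v in scaled] if norm > 0 else scaled
```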

2. Verification

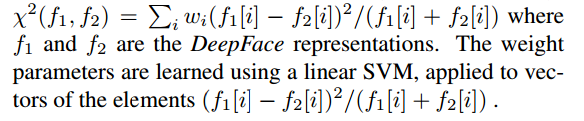

2.1 Chi-square distance

In this system, the normalized DeepFace feature vector has the following similarities with traditional histogram-based features (such as LBP):

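All values are non-negative

Very sparse

All element values lie in the interval [0, 1]

The weighted chi-square distance is computed as:

$$\chi^2(f_1, f_2) = \sum_i w_i \frac{(f_1[i] - f_2[i])^2}{f_1[i] + f_2[i]}$$

where $f_1$ and $f_2$ are the normalized DeepFace feature vectors of the two faces and the weights $w_i$ are learned with a linear SVM.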
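The chi-square distance can be written directly in code, with optional per-dimension weights w_i: the sum over i of w_i (f1[i] - f2[i])^2 / (f1[i] + f2[i]). With all weights equal to 1 it is the plain chi-square distance. This sketch assumes non-negative vectors of equal length and skips terms whose denominator is zero (both elements are then zero); the weights in the test are placeholders, not learned values.

```python
def chi_square(f1, f2, weights=None):
    """(Weighted) chi-square distance between two non-negative feature vectors."""
    if weights is None:
        weights = [1.0] * len(f1)
    total = 0.0
    for a, b, w in zip(f1, f2, weights):
        s = a + b
        if s > 0:  # otherwise both elements are zero and contribute nothing
            total += w * (a - b) ** 2 / s
    return total
```

Because DeepFace features are sparse, many dimensions are zero in both vectors, so skipping zero denominators matters in practice.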

2.2 Siamese network

The paper also describes an end-to-end metric learning approach. Once training is complete, the face recognition network (up to F7) is replicated, one copy per input image, and the two resulting feature vectors are used directly to predict whether the two input images belong to the same person. This involves two steps: (a) compute the absolute difference between the two feature vectors; (b) apply a fully connected layer that maps this difference to a single logistic unit (same/different output).
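The two steps of this Siamese head can be sketched as below: an element-wise absolute difference followed by one fully connected layer with a single logistic output. The weights and bias are hypothetical placeholders that would be trained on same/different pairs; the shared feature extractor (up to F7) is not shown.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def siamese_same_probability(f1, f2, weights, bias):
    """P(same person) = sigmoid(sum_i w_i * |f1_i - f2_i| + bias)."""
    z = bias + sum(w * abs(a - b) for w, a, b in zip(weights, f1, f2))
    return sigmoid(z)
```

With negative weights, larger feature differences push the output toward "different", which is the behavior training would be expected to learn.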

3. Experimental evaluation

3.1 Data set

Social Face Classification (SFC) dataset: 4.4M faces / 4,030 people

LFW: 13,323 faces / 5,749 people

LFW restricted protocol: only same/not-same labels are available

LFW unrestricted protocol: additional training pairs are also available

LFW unsupervised protocol: no training on LFW

YouTube Faces (YTF): 3,425 videos / 1,595 people

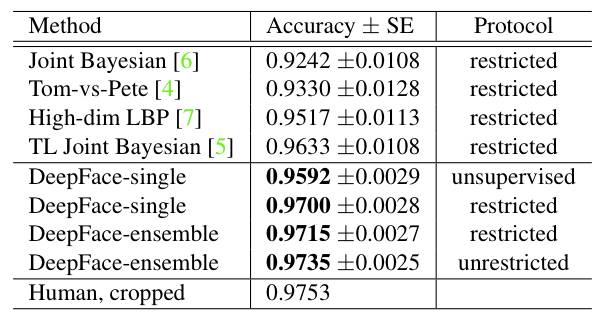

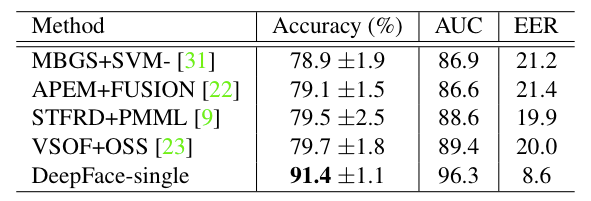

result on LFW:

result on YTF:

The biggest difference between DeepFace and later methods is that DeepFace aligns faces before training the neural network. The paper argues that the neural network works because, once faces are aligned, each facial region is fixed to particular pixel locations, so a convolutional neural network can learn features from them.

The face_recognition library introduced below uses the latest deep-learning-based face recognition method from dlib, a C++ toolkit. On the Labeled Faces in the Wild benchmark, it achieves an accuracy of 99.38%.

More algorithms

http://

dlib: http://dlib.net/

Data testing library Labeled Faces in the Wild: http://vis-

The library provides a simple command-line tool, face_recognition, that lets users run face recognition on a folder of images directly from the command line.

Capture facial features in pictures

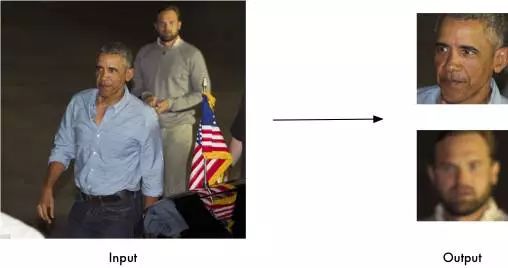

Capture all faces in one picture

Find and process the features of the face in the picture

Find the position and contour of each person's eyes, nose, mouth, and chin.

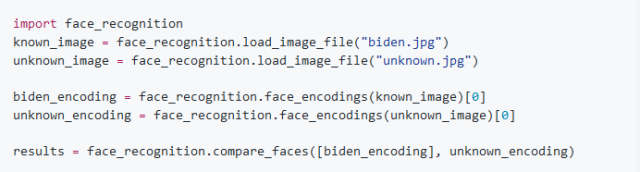

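```python
import face_recognition

# Load the image file into a NumPy array (RGB)
image = face_recognition.load_image_file("your_file.jpg")

# Find every face; each location is a (top, right, bottom, left) tuple in pixels
face_locations = face_recognition.face_locations(image)
```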

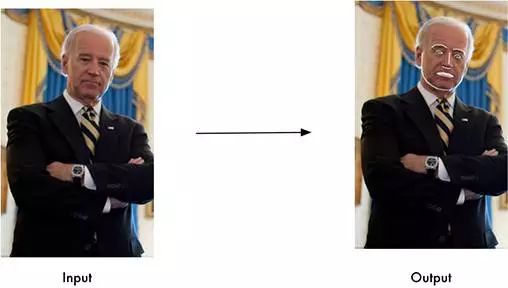

Finding facial features has many important uses; for example, it can be used for digital makeup in photos (as in Meitu Xiuxiu).

digital make-up: https://github.com/ageitgey/face_recognition/blob/master/examples/digital_makeup.py

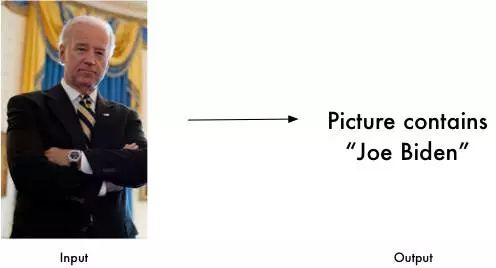

Recognize faces in pictures

Identify who appears in the photo

installation steps

This library supports Python 3 and Python 2. It has only been tested on macOS and Linux; whether it works on Windows is unknown.

Install the module from PyPI using pip3 (or pip2 for Python 2).

Important note: compiling dlib may fail. You can fix such errors by installing dlib from source rather than with pip; see the installation guide "How to install dlib from source":

https://gist.github.com/ageitgey/629d75c1baac34dfa5ca2a1928a7aeaf

Install dlib manually and run pip3 install face_recognition to complete the installation.

How to use the command line interface

Installing face_recognition also gives you a simple command-line program called face_recognition, which recognizes the faces in a single photo or in every photo in a folder.

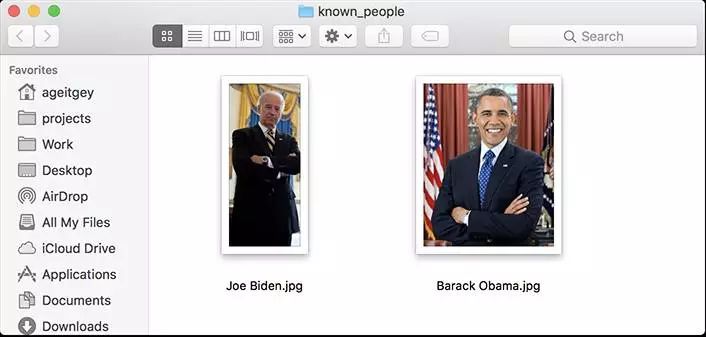

First, prepare a folder of photos of people you already know: one photo per person, with each file named after that person.

Then you need to prepare another folder, which contains the face photos you want to recognize;

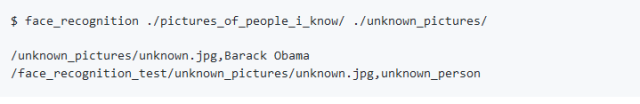

Next, simply run the face_recognition command; the program uses the folder of known faces to identify who appears in each unknown photo.

The program prints one line for each face: the file name followed by the recognized name, separated by a comma.
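If the command's output is piped into a script, each line can be split at the first comma into the file name and the recognized name, then grouped per photo. A small parsing sketch, assuming exactly that `file,name` line format; the sample lines are hypothetical.

```python
from collections import defaultdict

def parse_results(lines):
    """Group recognized names by image file from 'file,name' output lines."""
    names_by_file = defaultdict(list)
    for line in lines:
        line = line.strip()
        if not line:
            continue  # ignore blank lines
        filename, _, name = line.partition(",")
        names_by_file[filename].append(name)
    return dict(names_by_file)

if __name__ == "__main__":
    sample = [
        "unknown/group.jpg,alice",
        "unknown/group.jpg,bob",
        "unknown/solo.jpg,carol",
    ]
    print(parse_results(sample))
```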

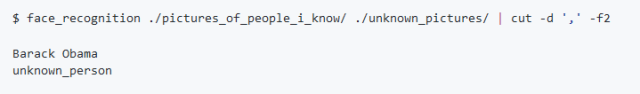

If you just want to know the name of the person in each photo and not the file name, you can do the following:
