Many companies are probably aware that mobile VR lacks good interaction, and various interaction solutions have emerged this year. Some do outside-in positional tracking directly on all-in-one headsets; Leap Motion, uSens, and Micro Motion do gesture tracking with binocular (stereo) cameras; and there are also Intel's work with Android on inside-out positional tracking, Google Daydream's three-degree-of-freedom controller, and many more.

Now another Chinese startup has entered this field with a mobile VR interaction solution based on the phone's own camera. The Beijing startup is called Yingmeiji, and its product is called Hand CV. Compared with the solutions above, Hand CV's biggest feature is that it is lightweight: it needs no extra hardware, runs only on the phone's camera and software, and is licensed free of charge.

Light gesture interaction

"Light" gesture interaction is, naturally, defined in contrast to "heavy" gesture interaction. According to Zhu Yucong, CEO of Yingmeiji, Leap Motion and uSens are heavy gesture interaction: they not only require additional hardware, but also place greater demands on power and processing performance.

In terms of actual functionality, heavy gesture interaction tracks the movement of the finger joints of both hands, aiming to reproduce your hands in the virtual world, as in the Leap Motion demo shown in the diagram above. Hand CV's gesture interaction instead uses distinct gestures to perform basic operations such as selecting, clicking, and dragging, as demonstrated in the following video.

Video: Hand CV Monocular Gesture Recognition Demo

For example, with Leap Motion you can pick up an object in the virtual world with your hand and throw it, but you can't do this with Hand CV: a monocular camera cannot obtain precise depth information, although image recognition algorithms can still estimate rough depth.
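To see why a single camera yields only rough depth, here is a minimal sketch of one common monocular technique, the pinhole camera relation Z = f·W/w, which infers distance from the apparent size of an object whose real size is assumed. This is an illustration, not Hand CV's disclosed method; the function name, the calibrated focal length, and the 8.5 cm palm width are all assumptions.

```python
def estimate_hand_depth(focal_length_px: float, palm_width_m: float, palm_width_px: float) -> float:
    """Rough distance from camera to hand via the pinhole model Z = f * W / w.

    focal_length_px: focal length in pixels (assumed known from camera calibration)
    palm_width_m:    assumed real palm width in metres (varies between people)
    palm_width_px:   palm width measured in the segmented image, in pixels
    """
    if palm_width_px <= 0:
        raise ValueError("palm width in pixels must be positive")
    return focal_length_px * palm_width_m / palm_width_px


# Assumed values: f = 1000 px and an 8.5 cm palm that appears 170 px wide
print(round(estimate_hand_depth(1000.0, 0.085, 170.0), 3))  # 0.5
```

Because the real palm width W differs from person to person, a 1 cm error in the assumed 8.5 cm width shifts the estimate by roughly 12%, which is one reason such monocular depth is only approximate.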

According to the official introduction, the technology used by Hand CV is:

Frames are captured by an ordinary camera, and palm detection and segmentation are performed frame by frame. From the segmented regions, the target object's features are extracted with a k-cos clustering algorithm, and the extracted features are then used as input for gesture recognition. The recognition stage uses a hidden Markov model trained repeatedly on a large set of labeled gesture samples.
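The final step, recognizing a gesture with hidden Markov models, can be sketched as follows, assuming (hypothetically) that each frame's extracted features have already been quantized into a discrete symbol such as a cluster index. One HMM per gesture scores a frame sequence with the forward algorithm, and the gesture whose model gives the highest likelihood wins. The gesture names, symbol meanings, and probabilities are toy values, and training (for example with Baum-Welch on labeled samples) is omitted.

```python
import math
from typing import Dict, List, Sequence


def _log(p: float) -> float:
    # log(0) is represented as -inf so impossible transitions stay impossible
    return math.log(p) if p > 0 else -math.inf


def _logsumexp(values: Sequence[float]) -> float:
    # Numerically stable log(sum(exp(v))) to avoid underflow on long sequences
    m = max(values)
    if m == -math.inf:
        return -math.inf
    return m + math.log(sum(math.exp(v - m) for v in values))


class DiscreteHMM:
    """HMM over discrete observation symbols (toy parameters, no training)."""

    def __init__(self, start: List[float], trans: List[List[float]], emit: List[List[float]]):
        self.log_start = [_log(p) for p in start]
        self.log_trans = [[_log(p) for p in row] for row in trans]
        self.log_emit = [[_log(p) for p in row] for row in emit]

    def log_likelihood(self, obs: Sequence[int]) -> float:
        """Forward algorithm in log space: returns log P(obs | model)."""
        if not obs:
            raise ValueError("observation sequence must be non-empty")
        n = len(self.log_start)
        alpha = [self.log_start[s] + self.log_emit[s][obs[0]] for s in range(n)]
        for o in obs[1:]:
            alpha = [
                _logsumexp([alpha[i] + self.log_trans[i][j] for i in range(n)])
                + self.log_emit[j][o]
                for j in range(n)
            ]
        return _logsumexp(alpha)


def classify(obs: Sequence[int], models: Dict[str, DiscreteHMM]) -> str:
    """Pick the gesture whose model assigns the sequence the highest likelihood."""
    return max(models, key=lambda name: models[name].log_likelihood(obs))


# Hypothetical symbols: 0 = open palm, 1 = closed fist, 2 = pointing finger
models = {
    # "click": starts open, then closes into a fist and stays closed
    "click": DiscreteHMM(
        start=[1.0, 0.0],
        trans=[[0.6, 0.4], [0.0, 1.0]],
        emit=[[0.8, 0.1, 0.1], [0.1, 0.8, 0.1]],
    ),
    # "point": a held pointing pose in either state
    "point": DiscreteHMM(
        start=[1.0, 0.0],
        trans=[[0.9, 0.1], [0.1, 0.9]],
        emit=[[0.1, 0.1, 0.8], [0.1, 0.1, 0.8]],
    ),
}

print(classify([0, 0, 1, 1], models))  # click
print(classify([2, 2, 2], models))     # point
```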

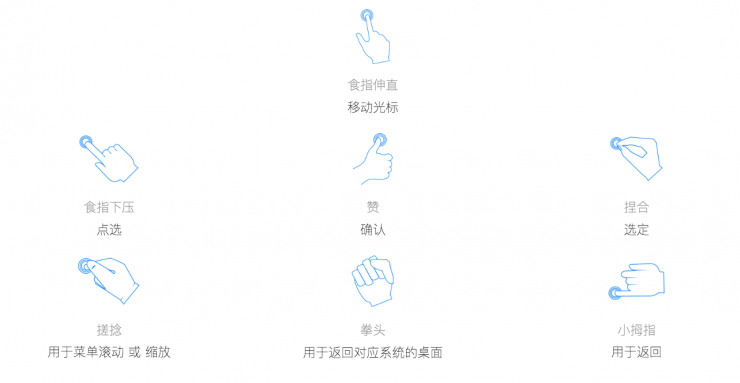

Hand CV 2.0 is expected to support the gesture types shown in the accompanying figure (provisional), and Zhu Yucong said that manufacturers can customize gestures to suit their needs.

The product will first support iOS devices, mainly because Apple's hardware is relatively stable. On devices with the A8 processor, it uses 8% to 10% of processing performance. To reduce power consumption, it samples one camera frame every 2 to 3 seconds in "standby" and processes 12 frames per second while in use.
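The standby/active sampling policy can be sketched as a small scheduler. The 2.5 s standby interval (a midpoint of the quoted 2 to 3 seconds), the rule that a detected hand switches to active mode, and the 24-idle-frame timeout before returning to standby are assumptions for illustration, not details the company has disclosed.

```python
from dataclasses import dataclass, field


@dataclass
class FrameScheduler:
    """Hypothetical capture scheduler: sparse sampling in standby, 12 fps when active."""

    standby_interval_s: float = 2.5     # assumed midpoint of the quoted 2-3 s
    active_fps: float = 12.0            # rate quoted for active use
    idle_frames_to_standby: int = 24    # assumed: about 2 s without a hand at 12 fps
    active: bool = False
    _idle_frames: int = field(default=0, init=False, repr=False)

    def interval_s(self) -> float:
        """Seconds to wait before capturing the next frame in the current mode."""
        return 1.0 / self.active_fps if self.active else self.standby_interval_s

    def on_frame(self, hand_detected: bool) -> float:
        """Update the mode from the latest frame and return the next capture delay."""
        if hand_detected:
            self.active = True
            self._idle_frames = 0
        elif self.active:
            self._idle_frames += 1
            if self._idle_frames >= self.idle_frames_to_standby:
                self.active = False
                self._idle_frames = 0
        return self.interval_s()


def frames_per_minute(interval_s: float) -> float:
    return 60.0 / interval_s


sched = FrameScheduler()
print(round(frames_per_minute(sched.interval_s())))  # standby: 24
sched.on_frame(hand_detected=True)
print(round(frames_per_minute(sched.interval_s())))  # active: 720
```

At these settings standby processes about 24 frames per minute versus 720 at 12 fps, roughly 30 times fewer; the quoted 2 to 3 second range corresponds to 24 to 36 times fewer. The idle timeout adds hysteresis so that a briefly lost hand does not immediately drop the app back into standby.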

The company plans to release a product demo on the App Store in September, along with the SDK. The official version is expected between the end of this year and early next year.

Has it hit mobile VR's pain point?

After trying Hand CV, this editor found the overall operation simple and quick to pick up: only a few gestures need to be remembered, and gesture recognition was reasonably fast and accurate.

To promote the product, Yingmeiji uses a free licensing model; partners include Youku and Havisi. Content vendors can simply embed Hand CV in their own apps, giving users of phone-based VR boxes gesture control. For video, this means play, pause, and drag (seek); for games, the targets are usually light interactive titles such as card games.
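To illustrate what embedding gesture control in a video app might look like, here is a minimal sketch of a gesture-to-command dispatcher. The source does not describe Hand CV's SDK, so the `Gesture` labels, the `VideoPlayer` class, the 10-second seek step, and which gesture triggers which action are all hypothetical.

```python
from enum import Enum, auto
from typing import Callable, Dict, Optional


class Gesture(Enum):
    """Hypothetical gesture labels; not Hand CV's actual gesture set."""
    PALM_OPEN = auto()
    FIST = auto()
    SWIPE_LEFT = auto()
    SWIPE_RIGHT = auto()


class VideoPlayer:
    """Stand-in for an app's player supporting play, pause, and seek (drag)."""

    def __init__(self, duration_s: float):
        self.duration_s = duration_s
        self.position_s = 0.0
        self.playing = False

    def play(self) -> None:
        self.playing = True

    def pause(self) -> None:
        self.playing = False

    def seek_by(self, delta_s: float) -> None:
        # Clamp to the valid range so repeated swipes cannot overshoot
        self.position_s = min(max(self.position_s + delta_s, 0.0), self.duration_s)


def make_bindings(player: VideoPlayer, seek_step_s: float = 10.0) -> Dict[Gesture, Callable[[], None]]:
    """Map each recognized gesture to a player command (hypothetical mapping)."""
    return {
        Gesture.PALM_OPEN: player.play,
        Gesture.FIST: player.pause,
        Gesture.SWIPE_LEFT: lambda: player.seek_by(-seek_step_s),
        Gesture.SWIPE_RIGHT: lambda: player.seek_by(seek_step_s),
    }


def dispatch(gesture: Optional[Gesture], bindings: Dict[Gesture, Callable[[], None]]) -> bool:
    """Run the bound command; return False when nothing was recognized or bound."""
    if gesture is None or gesture not in bindings:
        return False
    bindings[gesture]()
    return True


player = VideoPlayer(duration_s=120.0)
bindings = make_bindings(player)
dispatch(Gesture.PALM_OPEN, bindings)
dispatch(Gesture.SWIPE_RIGHT, bindings)
print(player.playing, player.position_s)  # True 10.0
```

Keeping the bindings in a dictionary lets an app remap gestures without touching the recognizer, which fits the note that manufacturers can customize gestures.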

However, this technology will also run into a problem shared by all computer vision (CV) technologies: whether the camera is monocular or binocular, gestures may not be recognized in very dim light, strong light, or visually noisy environments. To address this, Zhu Yucong said the company is building a library of 100,000 gesture models, hoping to improve the technology's robustness through deep learning.

Zhu Yucong said that Yingmeiji's goal is to become a supplier of basic interaction technology for AR/VR. He believes VR is only one piece of that, and that the technology will be far more valuable in AR in the future. The company has already licensed its technology to AR glasses manufacturers.

Beyond basic interaction, Zhu Yucong also revealed that the company is working with MasterCard on VR payment products, built mainly around gesture passwords. The company also hopes to apply the technology to products such as cars and robots.

On revenue, Yingmeiji does not plan to make money from AR/VR content vendors; it will consider profitability only after gaining enough market share. Meanwhile, the company is working with intellectual property agencies and hopes to earn revenue through technology licensing, for example by letting phone manufacturers build its technology into their products.

Yingmeiji was founded in 2014 by CEO Zhu Yucong and CTO Li Xiaobo. The company has long worked on computer vision technology and previously developed AR shopping products, but this year it has devoted its efforts to Hand CV.

As for the next direction, Zhu Yucong said the company will first get gesture recognition right and then explore other computer vision technologies, but it has no other product directions for now. He said technology should not exist just to show off; it needs good application scenarios. He also believes that SLAM's value for start-ups has passed and that it is too late to enter that field now.

Zhu Yucong is betting on Hand CV because he believes the product addresses a real pain point in the mobile VR experience. In his view, light interaction is a market of its own, heavy interaction is the market for handheld controllers, and depth-camera-based gesture tracking is mispositioned and will not find a large enough market.

For immersion, replacing the current phone-box interaction designs of "head turn + button click" or "head turn + touchpad" with gestures looks like a good solution, but whether users will embrace it remains to be seen after the product launches.