A pathologist's report, written after examining a patient's biological tissue samples, is often the gold standard in the diagnosis of many diseases. For cancer in particular, the pathologist's diagnosis has a profound effect on the patient's treatment. Reviewing pathology slides is a very complex task that requires years of training, along with a wealth of expertise and experience. Even with this training, there can be substantial variability in the diagnoses given by different pathologists for the same patient, which can lead to misdiagnosis. For example, agreement in diagnosis for certain types of breast cancer can be as low as 48%, and agreement in prostate cancer diagnosis is also low.

Low diagnostic agreement is not surprising, because an accurate diagnosis requires reviewing a massive amount of information. Pathologists are responsible for reviewing all the biological tissue visible on a slide. However, each patient may have many slides, and when they are digitized at 40x magnification the image data for each patient exceeds 1 billion pixels. Imagine going through a hundred or more 10-megapixel photos and being responsible for every pixel. Needless to say, this is a lot of data to cover, and time is often limited.

To address the problems of limited diagnostic time and inconsistent diagnoses, we are studying how deep learning can be applied to digital pathology by creating an automated detection algorithm that serves as an assistive tool in the pathologist's workflow. At Google Research, we used images from the Radboud University Medical Center, which were also used in the 2016 ISBI Camelyon Challenge, to train algorithms optimized to localize breast cancer that has spread (metastasized) to lymph nodes adjacent to the breast.

In this metastasis localization task, an off-the-shelf standard deep learning approach such as Inception (also known as GoogLeNet) already performs quite well, although the tumor probability heat maps it generates are still noisy. We made further customizations, including training networks on images at different magnifications (much as a pathologist does). The results suggest that it is possible to train a system that matches, or may even exceed, the performance of a pathologist who has unlimited time to examine the slides.

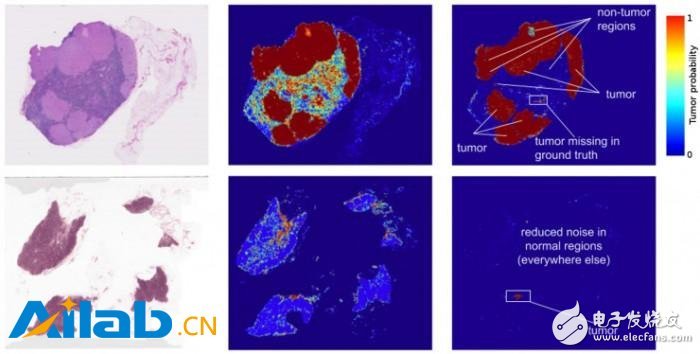

Figure 1 Left: images from two lymph node biopsies. Middle: the result of an earlier deep learning algorithm for tumor detection. Right: our current results. Note that the visible noise (potential false positives) is reduced in the second version.

In fact, the prediction heat maps produced by the algorithm have improved considerably. The algorithm's localization score (FROC) reached 89%, significantly higher than the 73% scored by a pathologist with no time constraint. We are not the only group to find this approach promising: other groups' models scored as high as 81% on the same data set. Even more exciting for us, our model is robust and generalizes to images obtained from different hospitals using different scanners. For more details, see the Google Research paper "Detecting Cancer Metastases on Gigapixel Pathology Images."

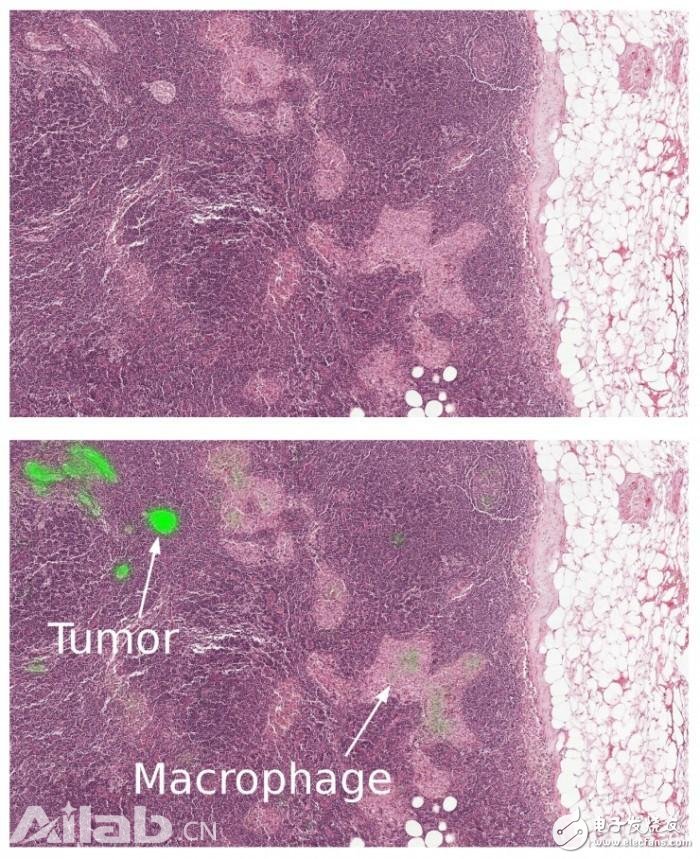

Figure 2 Close-up of a lymph node biopsy. The tissue contains breast cancer metastases as well as macrophages, which look similar to tumor but are benign normal tissue. Our algorithm successfully identifies the tumor region (bright green) and is not confused by the macrophages.

While these results are exciting, there are a few important caveats to consider:

Like most metrics, the FROC localization score is not perfect. Here, the FROC score is defined as the sensitivity at a small number of false positives per slide. A false positive is normal tissue misidentified as tumor, and sensitivity is the percentage of tumors detected. Pathologists, however, rarely produce false positives: the 73% score above corresponds to 73% sensitivity with zero false positives. In contrast, our algorithm's sensitivity rises when more false positives are allowed. With 8 false positives allowed per slide, our algorithm's sensitivity reaches 92%.

These algorithms perform well on the tasks they were trained for, but they lack the breadth of knowledge and experience of human pathologists. A human pathologist can detect abnormalities that the model has not been trained to classify, such as inflammatory processes, autoimmune diseases, or other types of cancer.
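The trade-off between sensitivity and false positives described above can be illustrated with a minimal Python sketch. It is not the evaluation code used in this work. The function name `sensitivity_at_fp`, the input layout, and the toy detections are all assumptions made for illustration, and ties between scores are not handled specially.

```python
def sensitivity_at_fp(detections, n_tumors, n_slides, max_fp_per_slide):
    """Best sensitivity reachable while the average number of false
    positives per slide stays at or below max_fp_per_slide.

    detections: list of (score, tumor_id) pairs pooled over all slides;
    tumor_id is None when the detection falls on normal tissue.
    (Hypothetical input layout, chosen for this sketch.)
    """
    found = set()          # distinct tumors detected so far
    false_positives = 0    # detections on normal tissue so far
    best = 0.0
    # Lower the score threshold one detection at a time, highest score first.
    for score, tumor_id in sorted(detections, key=lambda d: d[0], reverse=True):
        if tumor_id is None:
            false_positives += 1
            if false_positives / n_slides > max_fp_per_slide:
                break  # false positive budget exceeded
        else:
            found.add(tumor_id)
        best = len(found) / n_tumors
    return best


# Toy data: 2 slides containing 4 tumors in total (made-up values).
detections = [
    (0.95, "slide1-tumor1"),
    (0.90, "slide1-tumor2"),
    (0.80, None),
    (0.70, "slide2-tumor1"),
    (0.60, None),
    (0.50, None),
    (0.40, "slide2-tumor2"),
]

print(sensitivity_at_fp(detections, n_tumors=4, n_slides=2, max_fp_per_slide=0))  # 0.5
print(sensitivity_at_fp(detections, n_tumors=4, n_slides=2, max_fp_per_slide=1))  # 0.75
print(sensitivity_at_fp(detections, n_tumors=4, n_slides=2, max_fp_per_slide=8))  # 1.0
```

In this toy example, allowing zero false positives caps sensitivity at 50%, while a budget of 8 false positives per slide lets every tumor be found. This is the same pattern as the 73% versus 92% comparison above.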

To ensure the best clinical outcomes for patients, these algorithms need to be integrated into the pathologist's workflow as a complementary tool. We envision our algorithm improving both the efficiency of pathologists and the consistency of their diagnoses. For example, a pathologist could reduce the false negative rate (the percentage of undetected tumors) by reviewing the top-ranked predicted tumor regions, including up to 8 false positive regions per slide. In addition, these algorithms could allow pathologists to measure tumor size accurately, a factor associated with prognosis.
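One way to picture this review workflow is a short list of the highest-scoring candidate regions on a slide, sorted for the pathologist. The sketch below is only an illustration. The function `review_queue`, the dictionary keys `region_id` and `tumor_probability`, and the sample values are hypothetical and do not come from the system described here.

```python
def review_queue(candidates, max_regions):
    """Return the ids of the highest-scoring candidate regions, best first.

    candidates: list of dicts with the hypothetical keys
    "region_id" and "tumor_probability".
    """
    ranked = sorted(candidates, key=lambda c: c["tumor_probability"], reverse=True)
    return [c["region_id"] for c in ranked[:max_regions]]


# Made-up candidate regions for a single slide.
candidates = [
    {"region_id": "A", "tumor_probability": 0.62},
    {"region_id": "B", "tumor_probability": 0.97},
    {"region_id": "C", "tumor_probability": 0.15},
    {"region_id": "D", "tumor_probability": 0.88},
]

print(review_queue(candidates, max_regions=2))  # ['B', 'D']
```

The size of the list is left as a parameter because the right review budget per slide is a clinical decision, not something this sketch can settle.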

Training models is just the first step in turning interesting research into a real product. From clinical validation to regulatory approval, many hurdles remain. But we have made a very promising start, and we hope that by sharing our work we can accelerate progress in this area.
